Launching the first autonomous taxi service in Japan

See how Tier IV worked with Hexagon | AutonomouStuff to combine the technology necessary to deploy an autonomous taxi.

Company: Tier IV, a tech start-up and lead developer of Autoware open-source software for autonomous driving, based in Japan

Challenge: Choose and implement the right autonomous driving tech stack to launch the first autonomous taxi service in Japan

Solution: Collaborate with AutonomouStuff to outfit a vehicle with a drive-by-wire (DBW) system

Result: Successful public automated driving tests in Tokyo in late 2020

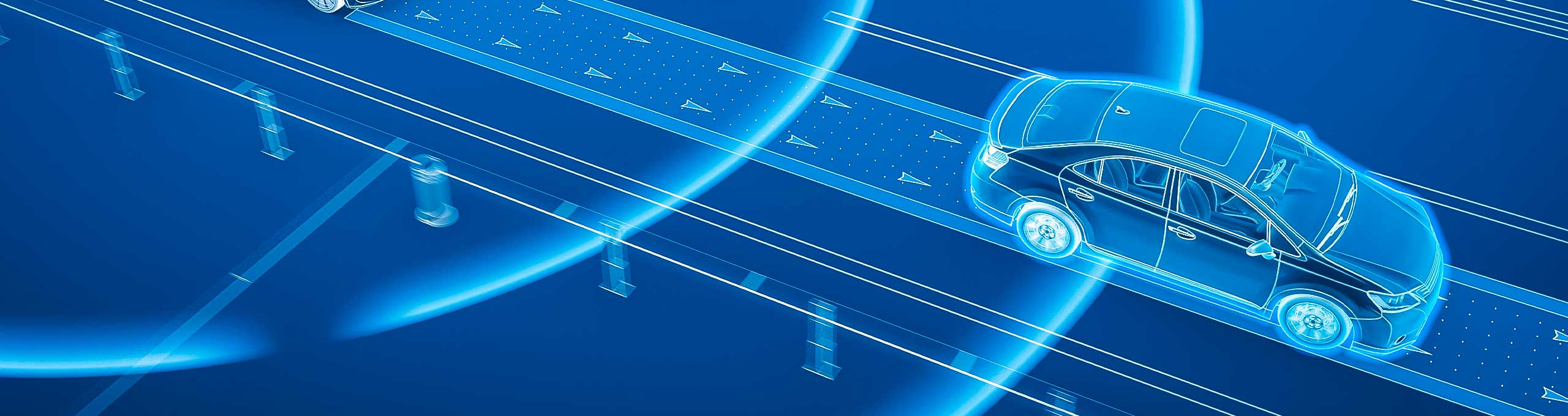

Many hands at several companies took an active role in getting the Robotaxi onto Tokyo’s busy streets. Chief among them are Tier IV, the originator and manager of the project; Hexagon | AutonomouStuff, which designed and installed the drive-by-wire system that directs taxi navigation and manoeuvring; and the Autoware Foundation, repository of many of the software stacks employed to transform sensor data into taxi commands.

As windy autumn curled into frigid winter on the streets of Tokyo, curious crowds gathered at select street corners in the busy Shinjuku district. Holding up their phones and tablets to film a small, black, well-accoutered taxi with two smiling passengers in the back seat, they marvelled and pointed out to each other that no one sat behind the steering wheel. Yet the taxi rolled smoothly out of the luxury hotel’s parking lot onto the busy street and away in traffic.

Autonomous driving had made its auspicious debut in one of the busiest metropolises in the world. The successful late-2020 tests of the Robotaxi were designed to check the safety, comfort and punctuality of the self-driving car, an increasingly likely conveyance of the future, and of the very near future at that. The companies involved hope to put such taxis into actual street operation in 2022 or soon thereafter.

Among many comments collected by the Tokyo press from more than 100 passengers who took the driverless rides, the vice governor of Tokyo said, “I felt safer than when I was in my friend’s car. It was a comfortable ride.” Clearly, this was a significant milestone for safer mobility in Tokyo and for autonomous vehicle technology all over the world.

Installing the controls

A drive-by-wire (DBW) system complements or replaces a vehicle’s mechanical controls such as the steering column, brake pedal and other linkages with electromechanical actuators that can be activated remotely or autonomously. In the current version of the Robotaxi, the DBW system sits alongside the traditional mechanical linkages so that — for the present at least — a human safety operator can retake command of the test vehicle if necessary.

“The scope of our work for the Robotaxi was installing our drive-by-wire system for the Toyota vehicle in the Japanese market,” says Lee Baldwin, segment director, core autonomy at Hexagon’s Autonomy & Positioning division.

“We took delivery of the Tier IV taxi at our headquarters in Morton, Illinois. We had to develop an interface to the onboard systems so we could provide a DBW system to Tier IV.”

The Platform Actuation and Control Module (PACMod), a proprietary system designed and built by Hexagon | AutonomouStuff engineers, provides precise by-wire control of core driving functions and ancillary components. It controls the accelerator, brakes, steering and transmission by wire, and it also sends commands to the turn signals, headlights, hazard lights and horn.

It has a controller area network (CAN) bus interface and collects vehicle feedback, such as speed, steering wheel angle and individual wheel speeds, for subsequent analysis. This is particularly important in the R&D vehicles for which it is primarily designed.

Finally, it has a built-in safety design with intuitive safety features, such as an immediate return to full manual control in urgent situations. This qualifies it for road approval in Europe, the U.S. and Japan.
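The behaviour described above (logging vehicle feedback and dropping back to manual control when the operator intervenes) can be pictured with a small sketch. This is only an illustration under stated assumptions: the class, method and field names are hypothetical and are not the PACMod interface, which the article does not describe.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class Mode(Enum):
    MANUAL = "manual"
    BY_WIRE = "by_wire"


@dataclass
class Feedback:
    """One feedback sample; the fields follow the examples named in the text."""
    speed_mps: float
    steering_wheel_angle_deg: float
    wheel_speeds_mps: Tuple[float, float, float, float]


@dataclass
class ByWireController:
    """Hypothetical model of a by-wire controller (not the real PACMod API)."""
    mode: Mode = Mode.MANUAL
    log: List[Feedback] = field(default_factory=list)

    def enable(self) -> None:
        # Hand control of the actuators to the autonomy software.
        self.mode = Mode.BY_WIRE

    def step(self, feedback: Feedback, operator_override: bool) -> Mode:
        # Record every sample so it can be analysed after the drive.
        self.log.append(feedback)
        # Any operator intervention immediately returns full manual control.
        if operator_override:
            self.mode = Mode.MANUAL
        return self.mode

    def accepts_commands(self) -> bool:
        # By-wire commands are only honoured while in by-wire mode.
        return self.mode is Mode.BY_WIRE
```

In this sketch, calling `enable()` and then `step(sample, operator_override=True)` leaves the controller in `Mode.MANUAL`, so later by-wire commands would be ignored until the system is enabled again.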

AutonomouStuff engineers installed the DBW system and the speed and steering control (SSC), then shipped the Robotaxi back to Japan. Tier IV took it to its shop and installed the many sensors and the company’s own proprietary version of Autoware.

Implementing the brains

Autoware consists of modular, customizable software stacks, each with a special purpose within the autonomous vehicle. It includes modules for perception, control and decision-making. At its top level, control, it formulates the actual commands that the system sends to the actuators via the DBW system to achieve what the planning module wants the vehicle to do to get from point A to point B. Because the architecture makes each function an independent module, functionality is easy to add, remove or modify to suit a particular project’s needs.
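The idea of independent, swappable modules can be sketched in a few lines. This is a toy illustration, not Autoware code: the stage functions, dictionary keys, the 10 m threshold and the gain of 0.5 are all assumptions made for the example.

```python
from typing import Any, Callable, Dict, List

# Each stage is a plain function that reads and extends a shared dictionary.
Stage = Callable[[Dict[str, Any]], Dict[str, Any]]


def perception(data: Dict[str, Any]) -> Dict[str, Any]:
    # Keep only detections flagged as relevant obstacles (hypothetical fields).
    obstacles = [d for d in data["detections"] if d["relevant"]]
    return {**data, "obstacles": obstacles}


def planning(data: Dict[str, Any]) -> Dict[str, Any]:
    # Plan a stop if any obstacle is closer than an assumed 10 m threshold.
    blocked = any(o["distance_m"] < 10.0 for o in data["obstacles"])
    return {**data, "target_speed_mps": 0.0 if blocked else 8.0}


def control(data: Dict[str, Any]) -> Dict[str, Any]:
    # Turn the planned speed into a simple proportional acceleration command.
    error = data["target_speed_mps"] - data["speed_mps"]
    return {**data, "accel_cmd_mps2": 0.5 * error}


def run_pipeline(stages: List[Stage], data: Dict[str, Any]) -> Dict[str, Any]:
    # Run the stages in order; any stage can be replaced without touching the rest.
    for stage in stages:
        data = stage(data)
    return data
```

Because every stage has the same signature, a project could swap `planning` for its own function in the list passed to `run_pipeline` and leave perception and control unchanged, which is the property the paragraph above describes.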

Autoware supplies and supports the world’s largest autonomous driving open-source community, developing applications from advanced driver assistance systems (ADAS) to autonomous driving. The software ecosystem is administered by the non-profit Autoware Foundation, which has more than 50 corporate, organizational and university members. Tier IV and AutonomouStuff are members and core participants of the foundation.

“Autoware is used in projects in the U.S., Japan, China, Taiwan and Europe,” says Christian John, president of Tier IV North America. “All the learning, testing, debugging, all that experience comes back into the open-source platform. Everyone benefits from those enhancements.

“It takes a large number of partners to implement and deploy an autonomous technology. The various sensors, the LiDARs, the cameras, the ECUs running the software, all these have to come together to implement autonomy.”

AutonomouStuff and Tier IV have worked together since early 2020 in a strategic partnership to create, support and deploy autonomy software solutions around the world and across a variety of industries.

Demonstrating the technology in real-world scenarios

In November and December 2020, Tier IV and its partners conducted a total of 16 days of public automated driving tests in Nishi-Shinjuku, a busy commercial centre in central Tokyo. Government officials and members of the public were recruited as passengers to ride and comment on routes that ranged from 1 to 2 kilometres in traffic. On some days, a safety driver sat behind the wheel, ready to take control if something unexpected happened (it never did). On others, the driver’s seat was empty; a remote driver monitored screens showing the vehicle’s surroundings and progress, ready to assume remote control.

The November tests ran along a single predetermined route. The December tests allowed participants to choose among three different departure and arrival points on their smartphones, summon the taxi to them and then ride it to their desired destination. Therefore, the vehicle had to compute and decide among many potential routes, making the implementation more challenging.
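To give a feel for what choosing among potential routes involves, here is a minimal sketch using Dijkstra's shortest-path algorithm on a tiny road graph. The article does not say which planner the Robotaxi used, so the algorithm choice, the stop names and the distances are all assumptions for illustration.

```python
import heapq
from typing import Dict, List, Optional, Tuple

# Hypothetical road graph: node -> {neighbour: segment length in metres}.
ROADS: Dict[str, Dict[str, float]] = {
    "hotel": {"j1": 400, "j2": 700},
    "j1": {"hotel": 400, "station": 900, "j2": 200},
    "j2": {"hotel": 700, "j1": 200, "park": 500},
    "station": {"j1": 900, "park": 600},
    "park": {"j2": 500, "station": 600},
}


def shortest_route(
    graph: Dict[str, Dict[str, float]], start: str, goal: str
) -> Optional[Tuple[float, List[str]]]:
    """Dijkstra's algorithm; returns (total distance, list of nodes) or None."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, start, [start])]
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, length in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(
                    queue, (dist + length, neighbour, path + [neighbour])
                )
    return None  # No route connects start and goal.
```

With a fixed route, as in November, none of this is needed. Once riders can pick their own pick-up and drop-off points, a search like `shortest_route(ROADS, "hotel", "park")` has to run for each request.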

More than 100 test riders participated. It was an intensive learning experience for the designers and operators of the Robotaxi, as some of the Tier IV engineers subsequently recounted in online blogs about the demonstrations. The challenging environment and conditions of Nishi-Shinjuku (heavy traffic, many left and right turns, lane-change decisions and more) combined to fully test Robotaxi’s capabilities.

One of the unexpected lessons learned concerned false detection of obstacles. High curbs on some roadsides and even accumulations of leaves in gutters created problems for the perception system. Autoware is programmed to recognize what must be detected, such as automobiles and pedestrians, and to distinguish these objects from others such as rain or blowing leaves, which can be ignored. However, this remains a work in progress. Differentiating between falling leaves and objects falling off the back of a truck is not as easy for Robotaxi as it is for human eyes and brains.

Another area needing further work was unprotected turns at non-signalled intersections, where either the view of oncoming traffic was obscured (a situation that calls for a good deal of human judgment and quick reaction) or the approach speed of the oncoming traffic was difficult to estimate.

To compound this, Autoware is programmed not to accelerate suddenly to take advantage of a gap in traffic as a human might; the comfort and ease of passengers have value too. Such a balance between conservativeness and aggressiveness, so natural to humans, can be difficult to achieve in a programmed system in heavy traffic.
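One common way to keep a vehicle from accelerating suddenly is to clamp both the acceleration and its rate of change (jerk). The sketch below shows that general technique; it is not taken from Autoware, and the function name and limit values are assumptions chosen for illustration.

```python
def limit_acceleration(
    requested_mps2: float,
    previous_mps2: float,
    dt_s: float,
    max_accel_mps2: float = 1.5,   # assumed comfort limit
    max_jerk_mps3: float = 1.0,    # assumed comfort limit
) -> float:
    """Clamp an acceleration request to comfort limits."""
    # Limit how quickly the acceleration itself may change (jerk).
    max_step = max_jerk_mps3 * dt_s
    step = max(-max_step, min(max_step, requested_mps2 - previous_mps2))
    limited = previous_mps2 + step
    # Limit the absolute acceleration in either direction.
    return max(-max_accel_mps2, min(max_accel_mps2, limited))
```

Tighter limits make the ride smoother but also mean the vehicle needs a larger gap in traffic before it can pull out, which is the trade-off between comfort and assertiveness described above.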

The LiDAR sensors also experienced occasional difficulty in environments without distinctive features, such as open park areas and tunnels. Further, the relatively high expense of LiDAR sensors may create difficulties at mass-market scale, when many vehicles need to be outfitted.

To address these issues, some Tier IV engineers blogged that they are experimenting with a technique called Visual SLAM, which uses a relatively inexpensive camera coupled with an inertial measurement unit (IMU) in place of a LiDAR sensor. The technique builds a map from visual information while simultaneously estimating the vehicle’s position within that map. In addition, a technology called re-localisation, which estimates the vehicle’s position in a map created in advance, is being actively researched.

But Visual SLAM has its own challenges: it does not operate well in darkness, nor with many simultaneously and divergently moving objects.

Scaling for the future

Nevertheless, Tier IV and AutonomouStuff relish the challenges.

“A lot of innovation is happening in this space,” John says. “The OS (Open Source) allows many players to bring their solutions into the ecosystem: cost, power consumption, safety architectures—these efforts are bringing in the best-of-class solutions and players.”

The fast-developing driverless vehicle market features a lot of players and many variants of sensor combinations and integrations; some are expensive, some less so.

“Other companies are very vertically integrated,” John adds, “developing their own software stacks, as opposed to our approach to open source. The market is still pretty early from the standpoint of mass deployment adoption. Some players have been able to demonstrate full Level 4 and deploy in limited markets.”

But at the same time, truly scaling up these approaches requires another significant round of system optimisation. More than 1,000 watts of computing power in a car and sensor integration costing US$100,000 per vehicle simply do not scale to tens of thousands of vehicles across many cities.

“That’s why there’s now all this investment: solid-state LiDAR, imaging radar, all these things that continue to advance perception capabilities. This means new solutions must be integrated and optimized within our perception stack. Then, once you’ve made these changes, how do I verify my new system? How do I demonstrate that it still meets safety requirements?”

John has final words for the future. “To me, it feels like everybody has demonstrated they can make the software work in limited deployments. To scale, it will take another significant investment to redesign and validate the systems, and open source will play a significant role here in optimizing next-generation AD solutions.”