Sensor fusion for positioning solutions

Enabling autonomy through sensor fusion.

Sensor fusion, at its most basic level, combines measurements from different sensors, such as GNSS, inertial sensors, photogrammetry, LiDAR, RADAR and others.

Below, we explain how this is achieved and how it enables autonomous applications.

Overview

Why sensor fusion?

Sensor fusion uses algorithms to combine measurements from many different sensors, so that the strengths of one sensor compensate for the weaknesses of another. The result is an output position that is more accurate, reliable and safe than any single sensor could provide, and that autonomous applications can depend on.
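To make the idea concrete, here is a minimal sketch of the simplest form of fusion, assuming two independent, unbiased sensors that each report the same scalar quantity with a known variance. The `Estimate` type and `fuse` function are hypothetical names chosen for illustration; this is not a description of any specific product's algorithm, and real positioning engines usually fuse many states at once with a Kalman filter or a similar estimator.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # e.g. position along one axis, in metres
    variance: float  # uncertainty of that value, in square metres


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Fuse two independent, unbiased estimates of the same quantity by
    inverse-variance weighting: the more certain sensor gets more weight."""
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    value = (w_a * a.value + w_b * b.value) / (w_a + w_b)
    variance = 1.0 / (w_a + w_b)  # always smaller than either input variance
    return Estimate(value, variance)


# Hypothetical readings: a 2 m (1-sigma) sensor and a 1 m (1-sigma) sensor
fused = fuse(Estimate(10.0, 4.0), Estimate(12.0, 1.0))
print(fused)  # Estimate(value=11.6, variance=0.8)
```

The fused value lands closer to the more precise sensor, and its variance (0.8 m²) is lower than that of either input, which is the basic reason fusing sensors yields a more accurate position than any one of them alone.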

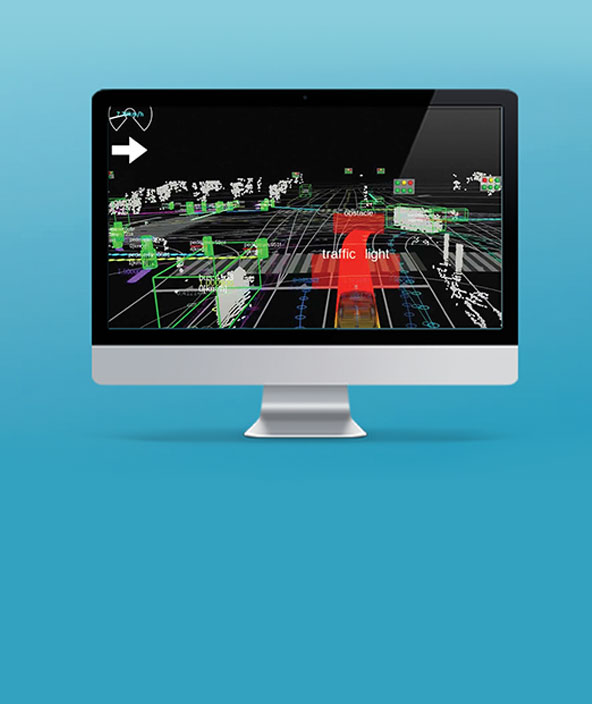

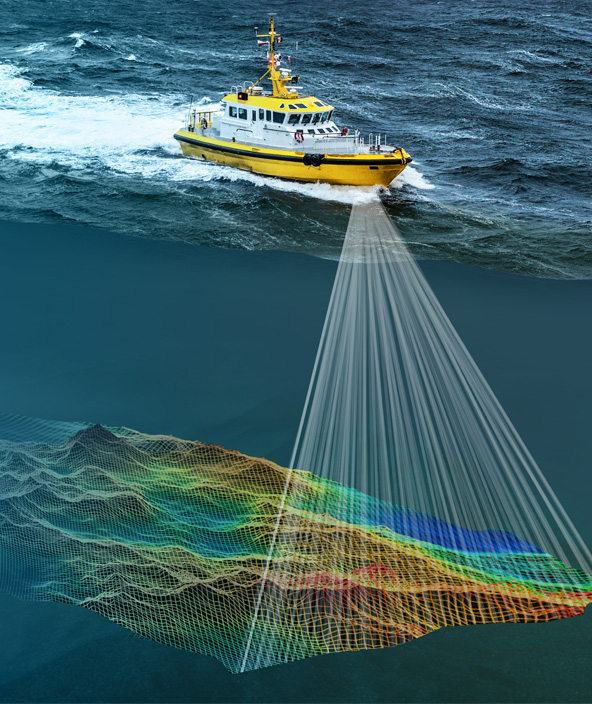

For example, Global Navigation Satellite Systems (GNSS) like the Global Positioning System (GPS) provide measurements that can be used to determine a position on the Earth. Inertial measurements from an Inertial Measurement Unit (IMU) can be used to determine a platform's velocity, acceleration and attitude, that is, its heading, roll and pitch. Perception sensors and techniques such as cameras, photogrammetry, LiDAR and RADAR measure a platform's surroundings and can be used to identify nearby objects or hazards, including road signs, pedestrians or objects to interact with.
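GNSS and an IMU are complementary: GNSS gives an absolute position, while integrating IMU accelerations gives smooth, high-rate motion that drifts over time. A common way to combine them is a Kalman filter. The sketch below is deliberately simplified and rests on stated assumptions: the state is only position and velocity along one axis, the IMU reports acceleration at 100 Hz, and a noise-free GNSS position fix arrives once per second. `Filter1D`, `simulate` and all noise values are hypothetical and chosen for illustration; production GNSS/INS engines estimate full 3D position, velocity, attitude and sensor biases.

```python
from dataclasses import dataclass, field


@dataclass
class Filter1D:
    """Toy 1D GNSS/IMU Kalman filter; state is [position (m), velocity (m/s)]."""
    pos: float = 0.0
    vel: float = 0.0
    # 2x2 covariance of [pos, vel] as nested lists (assumed initial uncertainty)
    P: list = field(default_factory=lambda: [[10.0, 0.0], [0.0, 1.0]])
    accel_noise_var: float = 0.1  # assumed accelerometer noise variance, (m/s^2)^2

    def predict(self, accel: float, dt: float) -> None:
        """Propagate the state with one IMU acceleration sample (dead reckoning)."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        # Covariance: P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
        (p00, p01), (p10, p11) = self.P
        q = self.accel_noise_var
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt**4 / 4,
             p01 + dt * p11 + q * dt**3 / 2],
            [p10 + dt * p11 + q * dt**3 / 2,
             p11 + q * dt**2],
        ]

    def update_gnss(self, z: float, gnss_var: float) -> None:
        """Correct the state with a GNSS position fix (measurement matrix H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + gnss_var            # innovation variance
        k0, k1 = p00 / s, p10 / s     # Kalman gain for position and velocity
        innovation = z - self.pos
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # Covariance: P <- (I - K H) P
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


def simulate(accel_bias: float, use_gnss: bool, seconds: int = 10) -> Filter1D:
    """Hypothetical scenario: constant 1 m/s^2 acceleration from rest, IMU at
    100 Hz (with an optional constant bias), noise-free GNSS fix once per second."""
    true_accel, dt = 1.0, 0.01
    f = Filter1D()
    for step in range(1, seconds * 100 + 1):
        f.predict(true_accel + accel_bias, dt)
        if use_gnss and step % 100 == 0:
            t = step * dt
            f.update_gnss(0.5 * true_accel * t * t, gnss_var=0.25)
    return f
```

Running `simulate(0.05, use_gnss=False)` shows the drift problem: a 0.05 m/s² accelerometer bias alone produces a 2.5 m position error after 10 s (0.5 × 0.05 × 10²). With `use_gnss=True`, the once-per-second GNSS fixes pull the estimate back toward the true 50 m position, while the IMU fills in the motion between fixes.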

How does sensor fusion enable autonomy?

An autonomous system relies on sensor fusion to understand where it is in the world, which hazards are nearby, and how it should interact with the objects around it. Without sensor fusion algorithms, and the positioning and perception technologies that supply their data, autonomous applications would not be possible.


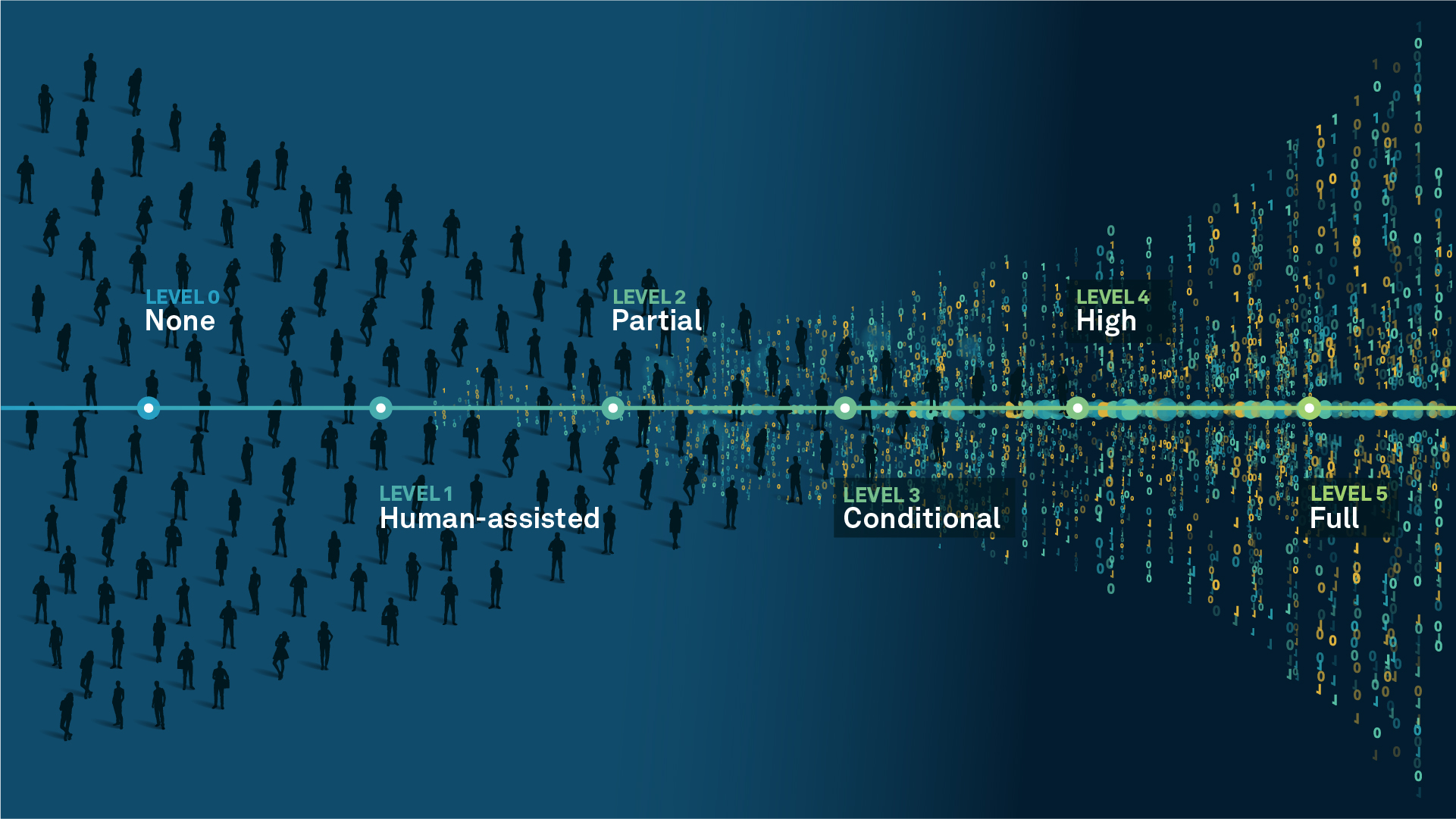

We’re climbing the automation curve

Our transition to intelligent automation is accelerating. Ultimately, our innovations will give rise to new technologies and applications, many of which we’ve yet to imagine. Today, every Hexagon solution is mapped and tagged according to its level of automation, so customers can clearly track our progress towards the freedom of autonomy.

Human-driven

All tasks are completed by human labour; no data is leveraged across the operation.

Human-assisted

Labour is primarily conducted by a human workforce. Some functions have been automated to simplify control.

Partial automation

Some tasks are automated for short periods of time, accompanied by occasional human intervention.

Conditional automation

A human workforce intervenes when needed, as autonomous operations begin to increase productivity.

Highly autonomous

Autonomous systems complete required tasks within specific bounds, unleashing data and building smart digital realities.

Full autonomy

A smart digital reality™ enables autonomous systems to complete all tasks without human intervention.
